tl;dr

We are witnessing rapid, large-scale adoption of AI, data learning and automated decision-making systems in the Indian public sector, but in the process, governments are failing to uphold a significant pillar of good governance – transparency. In this post, we highlight the lack of institutional transparency in India’s AI-based governance projects, note why transparency is of utmost importance when it comes to new technologies like AI, and urge the National Institute of Smart Government to do its part by ensuring transparency while integrating AI-based services in the public sector under the NeGP and by making existing project documents public.

Background

In the last two years, union and state governments have been deploying artificial intelligence (“AI”) based tools, portals and management systems across sectors to facilitate welfare service delivery, city planning and administration, enhanced security, surveillance, governance – the list is long and rapidly expanding. This is done with help from institutions such as the National Institute of Smart Government (“NISG”), which help implement projects envisaged under the National e-Governance Plan (“NeGP”). NISG is known as a trusted partner that helps governments transition to e-governance and empower their citizens, and it identifies ‘ensuring transparency’ as one of its core values. Yet, we notice a lack of transparency across smart government projects that use emerging technology like AI, data learning, or automated decision-making.

IFF wrote to NISG flagging the general lack of transparency in the deployment of AI-based projects and interventions initiated by the union and state governments over the last two years, and urging them to take proactive measures to make project documents and updates public.

The lack of transparency

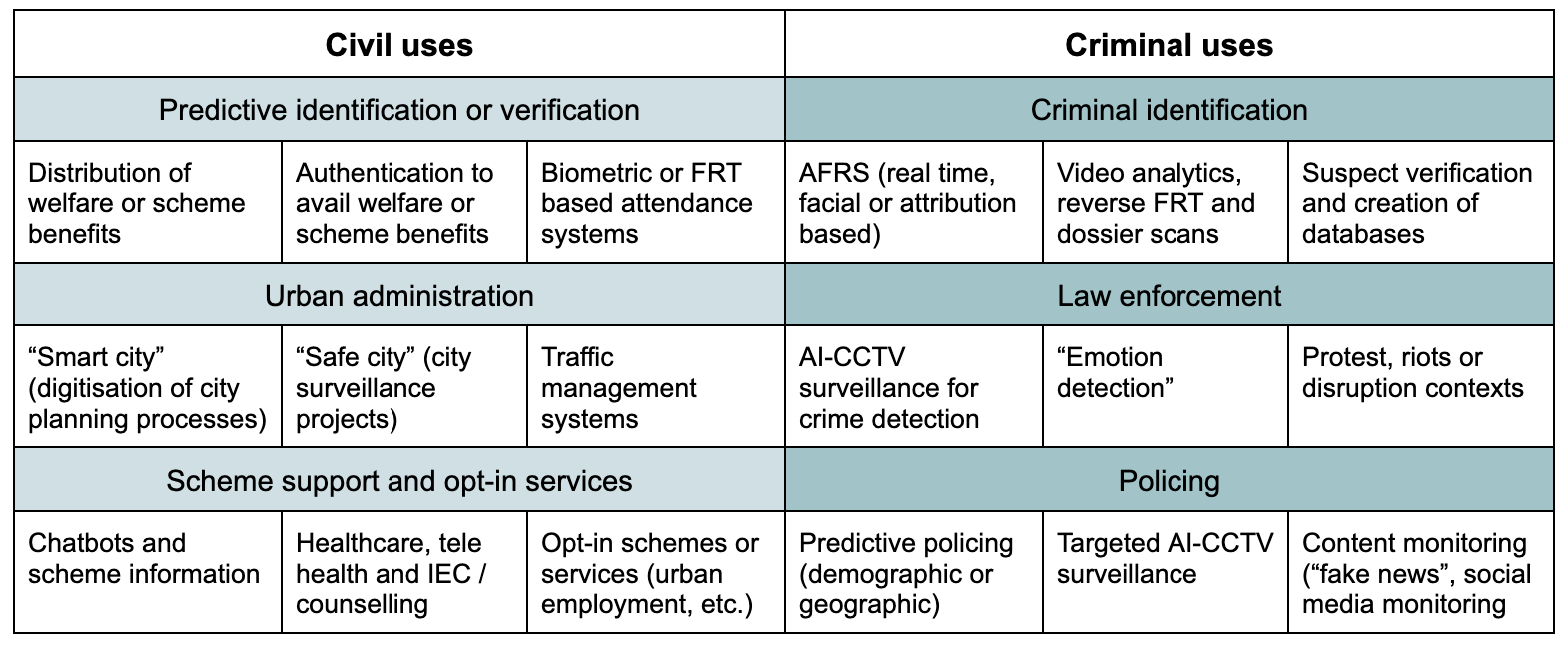

Indian governments are deploying AI and automated technologies on a large scale across sectors, spanning a number of use-cases. Our attempt at categorising use-cases is tabulated below:

Such large-scale deployment of AI by government agencies currently lacks transparency. For instance, at the city-level, AI is being used in traffic management, city planning, waste management, and other urban administration issues under several flagship “Smart City” programmes (see AI-driven smart city projects in Chandigarh, Lucknow, Pune, Agra, Chennai, Ayodhya; read more here). However, little information is available about the kind of technologies used, technology providers, identified risks and error margins, accuracy and cyber security measures taken, cost implications, accountability structure, the data collected through AI, and so on. We have two primary concerns with this manner of deployment.

Project documents not made public

First, AI is being integrated and implemented on a large scale, but it is not clear if the necessary stages of democratic policymaking are being followed. For instance, before introducing an AI-based project, a government agency may be involved in drafting proposals, initiating a tender and bidding processes, selecting and collaborating with technology providers, spending time on research, development, testing and impact assessments, initiating a pilot phase, and drawing findings and lessons from the pilot to scale up the project.

While researching and tracking this for over 10 months, we have noticed that the government agencies involved are not making such processes public. In particular, tenders or RFPs relating to AI technology are not uploaded on government websites, unlike other tenders, which are routinely posted and updated. Thus, it is difficult to ascertain whether governments are following procedural propriety, or to see the kind of partnerships being created between public authorities and private entities for the use of AI.

Stonewalling the right to information

Secondly, our attempts to access such information through requests filed under the Right to Information (“RTI”) Act, 2005 have been extremely difficult and largely unsuccessful. In these requests, we ask for basic information which we believe should be made public – like the nature of technology used, the terms of tenders, MoUs and data sharing agreements entered into between the government and technology providers, accuracy and impact assessments of the intervention, and related costs.

In our experience of seeking information on about 30 AI-based projects over a period of 10 months, we have, for the most part, received no response from the government within the 30-day period in which public authorities are mandated to furnish information under the RTI Act. The responses that we do get fail to reveal any relevant information. For more on our transparency efforts with AI projects, see our 2023 Transparency Report.

AI governance needs complete transparency

Moving towards smart government and integrating new technologies in the public sector is a reasonable objective. Globally, AI as a tool is seen to be ripe with possibilities and new horizons. But in keeping up with new technologies, governments must not circumvent the democratic pillars of transparent and accountable policymaking. There is a need for public authorities, including the NISG, to proactively upload project information, tenders and updates on their websites. This need is more pressing for AI, given that it runs on complex, stochastic, black-box algorithms that many may not completely understand, and given the large scale on which it is being adopted across India.

Instances of algorithmic bias, exclusion, and discrimination in automated decision-making are well documented. In India, scheme beneficiaries have faced exclusion and indignity due to errors in AI-based welfare services (see here and here). Research recommends that artificial intelligence, in its present form, should not be used in welfare service delivery by the union government at all, as the datasets training the AI models are often not free from bias.

The use of AI in welfare schemes and by the police in the UK revealed three deficiencies: an algorithm used by the Department for Work and Pensions led to dozens of people having their benefits removed; a facial recognition tool used by the Metropolitan police made more mistakes recognising dark-skinned faces than light ones; and an algorithm used by the Home Office to flag up sham marriages disproportionately selected people of certain nationalities.

Therefore, if union and state governments want to proceed with integrating AI across sectors, they must first invest time in studying not only the technologies and their potential applications, but also their impact on human rights, social equity, and good governance. Second, they must make the entire process transparent.

Our ask

We urge NISG, first and foremost, to ensure transparency while integrating AI-based services in the public sector under the NeGP and to make existing project documents public. In the long term, we hope NISG carefully reconsiders and assesses the impact of AI and new technologies on human rights and governance, and proceeds in adherence with democratic principles. We remain at your disposal should you wish to discuss the matter further.

Important documents

- IFF’s letter to NISG on transparency in government-led AI projects dated 21.03.2024 (link)