tl;dr

Google (including YouTube), Facebook, Instagram (both now under Meta), ShareChat, Snap, Twitter and WhatsApp have released their reports for December 2021 in compliance with Rule 4(1)(d) of the IT Rules 2021. The latest of these was published by Meta and was made available in late January 2022. The reports share the same shortcomings, which reflect a lack of effort on the part of both the social media intermediaries and the government to further transparency and accountability in platform governance. Yet again, the intermediaries have not reported on government requests, have used misleading metrics, and have not disclosed how they use algorithms for proactive monitoring. You can read our analysis of the previous reports here.

Background

The Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021 (‘IT Rules’) require, under Rule 4(1)(d), significant social media intermediaries to publish monthly compliance reports. In these reports, they are required to:

- Mention the details of complaints received and actions taken thereon, and

- Provide the number of specific communication links or parts of information that the social media platform has removed or disabled access to by proactive monitoring.

In order to understand the impact of the IT Rules on users and on the functioning of intermediaries, we examine and analyse the compliance reports published by Google (including YouTube), Facebook, Instagram, ShareChat, Snap, Twitter and WhatsApp, and capture some of the most important information for you below.

What is new this time?

We have been analysing the compliance reports published by Google (including YouTube), Facebook, Instagram, WhatsApp and Twitter since May 2021. We have found that, barring a few minor changes, these social media intermediaries have consistently followed the same reporting format every month. Their failure to raise standards of reporting, despite the growing call for transparency from both state and non-state actors around the world, shows a lack of effort and/or interest on their part in furthering transparency.

For December 2021, Twitter has introduced a few new initiatives, projects and updates to improve the ‘collective health’ of its platform. These measures include: (i) testing a new reporting process for harmful tweets to make reporting easier, (ii) testing an option to add one-time warnings to photos and videos containing unsettling or sensitive content, and (iii) enabling reporting of harmful Twitter Spaces on the web (earlier, this was available only to iOS and Android users).

Twitter states that the new reporting process, which is currently being tested in the US, centres on a human-first design. At present, a user reporting content on Twitter has to select which Twitter policy is being violated. Under the new process, instead of just ticking a box, users will be able to describe the incident. This will trigger a content review by Twitter to identify policy violations. According to Twitter, this will also allow it to investigate and find any other related content or accounts that may need to be removed.

What does the data on proactive monitoring reveal?

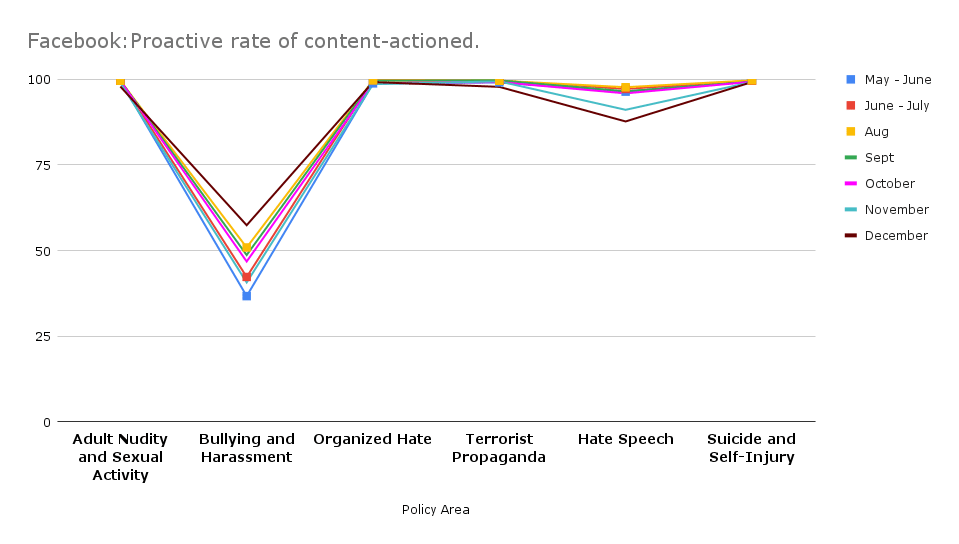

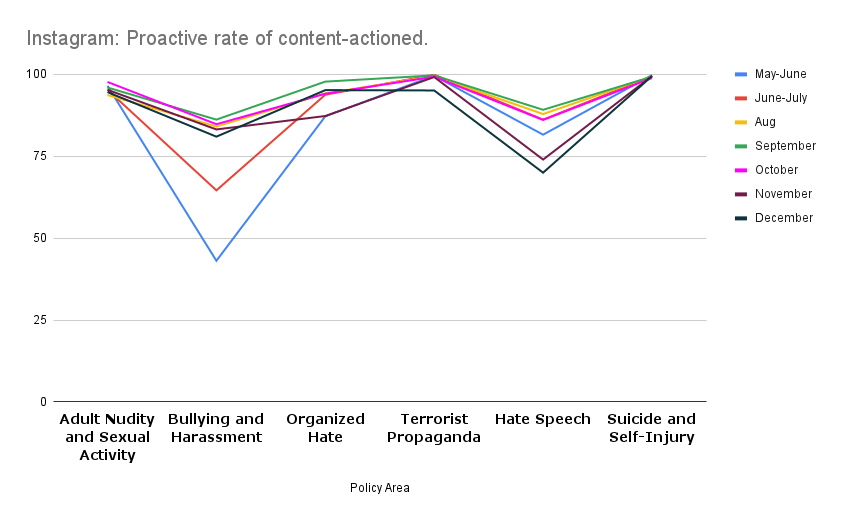

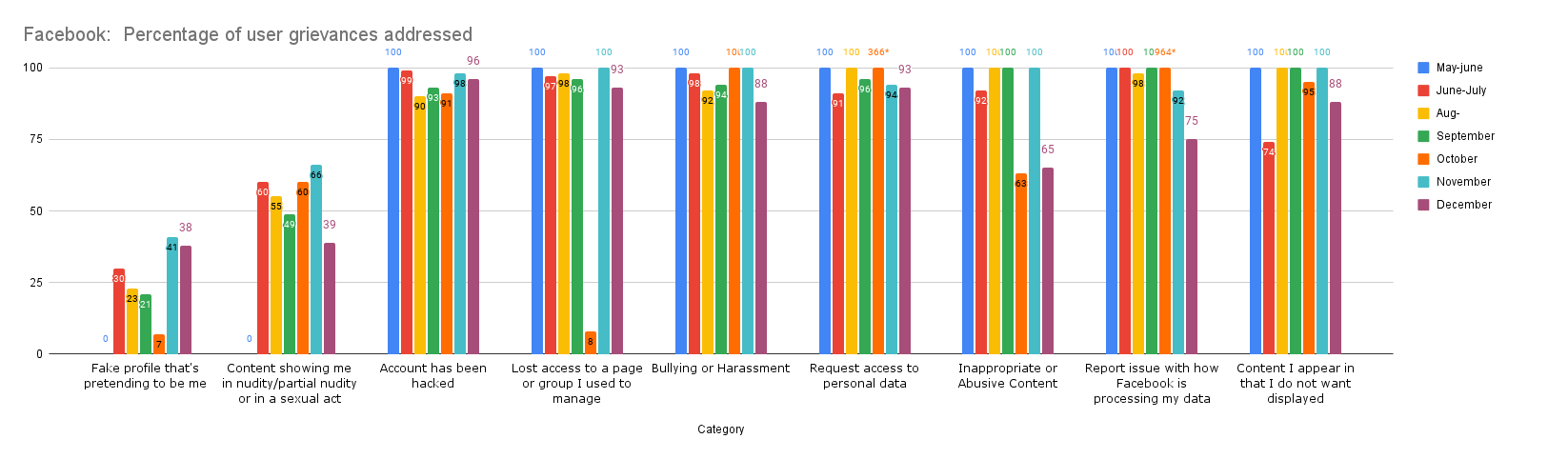

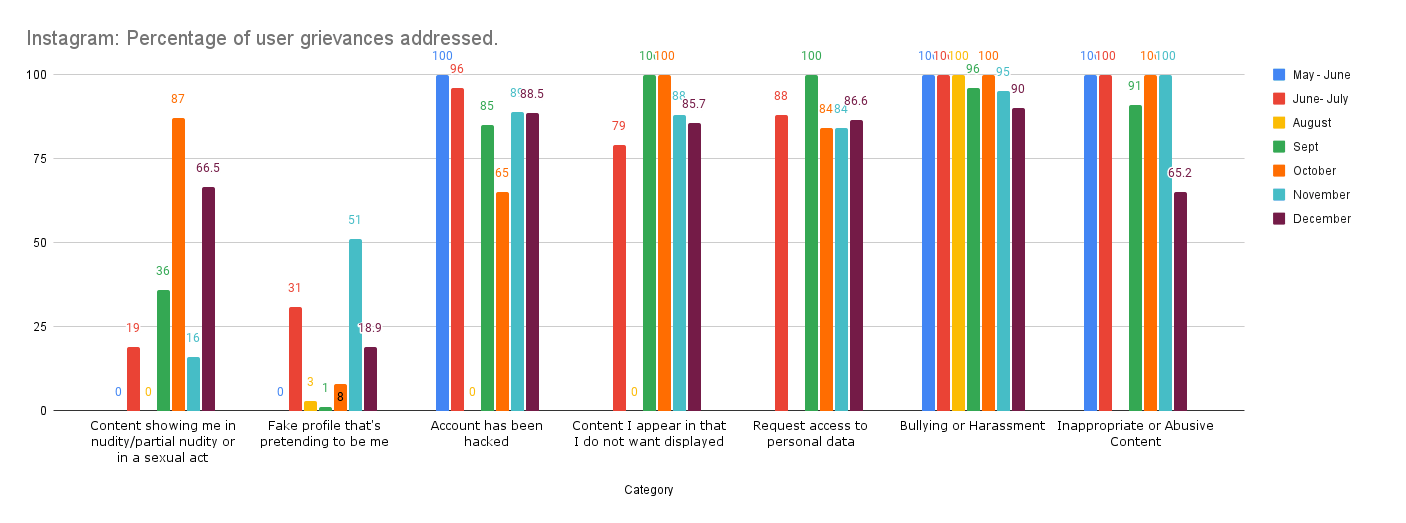

In our previous post, we highlighted that Facebook and Instagram have not changed the metrics they use to report proactive takedowns, despite the disclosures made by Frances Haugen. In their reports, Facebook and Instagram use two metrics: (i) ‘content actioned’, which measures the number of pieces of content (such as posts, photos, videos or comments) on which they take action for going against their standards and guidelines; and (ii) ‘proactive rate’, which refers to the percentage of ‘content actioned’ that they detected proactively, before any user reported it. The proactive rate is misleading because it is calculated only over content on which action was taken; it excludes all content on Facebook on which no action was taken, even though that content may itself be a cause for concern. We have written more about this in our previous post.
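The objection to the proactive rate can be illustrated with a small calculation. The sketch below computes the rate as described above (content detected proactively divided by all content actioned) and sets it beside a hypothetical ‘coverage’ figure: the share of all violating content that was actioned at all. Coverage would require knowing the total amount of violating content, which the platforms do not disclose. The helper names `proactive_rate` and `coverage`, and all numbers, are invented for illustration and are not taken from Meta’s reports.

```python
def proactive_rate(proactive_actioned: int, total_actioned: int) -> float:
    """Percentage of actioned content that was detected before any user report.

    Mirrors the metric as described in the reports: content that was never
    actioned does not appear anywhere in this calculation.
    """
    if total_actioned <= 0:
        raise ValueError("total_actioned must be positive")
    return 100 * proactive_actioned / total_actioned


def coverage(total_actioned: int, total_violating: int) -> float:
    """Hypothetical metric: share of all violating content that was actioned at all.

    Platforms do not disclose total_violating, so this cannot be computed from
    the compliance reports; it is shown only for contrast.
    """
    if total_violating <= 0:
        raise ValueError("total_violating must be positive")
    return 100 * total_actioned / total_violating


# Invented numbers: 900 of 1,000 actioned posts were caught proactively.
rate = proactive_rate(900, 1_000)

# The same proactive rate is consistent with very different underlying realities.
for violating in (1_200, 10_000, 100_000):
    print(f"violating={violating:>7}: proactive rate={rate:.1f}%, "
          f"coverage={coverage(1_000, violating):.1f}%")
```

In all three invented scenarios the proactive rate stays at 90%, while coverage ranges from about 83% down to 1%. A report that discloses only the proactive rate cannot distinguish between them.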

As per the metrics provided, the proactive rate for actioning content related to bullying and harassment rose significantly to 57.4% in December 2021, higher than in any previous month. This figure, however, continues to be the lowest when compared to the 10 other issues, for which the rate is above 91%.

Relatedly, the proactive rate for actioning content on grounds of hate speech fell to 87.7% in December 2021. This is a decline from previous months, when the rate stood at 91.9%, 95.9%, 96.5%, 97.7%, 97.2% and 96.4%.

Data on proactive monitoring for the other social media intermediaries is as follows:

- Google’s proactive removal actions through automated detection increased slightly to 4,05,911 in December 2021, after steadily declining to 3,75,468 in November 2021. The figures stood at 3,84,509 in October, 4,50,246 in September and 6,51,933 in August 2021.

- Twitter suspended 37,310 accounts on grounds of child sexual exploitation, non-consensual nudity and similar content, and 2,880 accounts for promotion of terrorism. These numbers stood at 35,583 and 3,820, respectively, in November.

- WhatsApp banned 20,79,000 accounts in December 2021, up from 17,59,000 in November. The figures stood at 20,69,000 in October 2021, 22,09,000 in September, 20,70,000 in August, 30,27,000 between June 16 and July 31, 2021, and 20,11,000 between May 15 and June 15, 2021. WhatsApp enforces bans through its abuse detection process, which operates at three stages: at registration, during messaging, and in response to negative feedback, which it receives in the form of user reports and blocks.
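Month-on-month changes in the figures above can be checked with a few lines of Python. The sketch below uses only the November and December 2021 figures quoted in this list. Figures written in the Indian numbering system (lakh grouping, e.g. 20,79,000) are converted to integers by stripping the commas. The helper names `parse_indian` and `pct_change` are our own, hypothetical choices.

```python
def parse_indian(figure: str) -> int:
    """Convert a figure written with Indian digit grouping, e.g. '20,79,000', to an int."""
    return int(figure.replace(",", ""))


def pct_change(old: int, new: int) -> float:
    """Percentage change from old to new (negative means a decrease)."""
    return 100 * (new - old) / old


# (November 2021, December 2021) figures as quoted in the list above.
reported = {
    "Google proactive removals": ("3,75,468", "4,05,911"),
    "Twitter suspensions (CSE, nudity, similar)": ("35,583", "37,310"),
    "Twitter suspensions (terrorism)": ("3,820", "2,880"),
    "WhatsApp account bans": ("17,59,000", "20,79,000"),
}

for label, (nov, dec) in reported.items():
    change = pct_change(parse_indian(nov), parse_indian(dec))
    print(f"{label}: {change:+.1f}%")
```

WhatsApp’s June 16 to July 31 figure covers 46 days rather than one month, so it is not directly comparable with the monthly figures.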

What does the data on the complaints received reveal?

*The data appears to be incorrect, as the number of grievances addressed exceeds the number of grievances received.

In the case of Google, 97.2% of the complaints received, and almost 100% of content removal actions, related to copyright and trademark infringement. This is roughly in line with the last report, in which 99.7% of such content was taken down. Other reasons for content removal included court orders (37), graphic sexual content (3), counterfeit (1) and circumvention (1). We have written about the trend of ‘complaint bombing’ of content for copyright infringement on YouTube to suppress dissent and criticism here. Content removals based on court orders decreased slightly to 37, after rising to 56 in November 2021. The figure stood at 49 in October 2021, and at 10, 12, 4, 6 and nil in the earlier months since this parameter was added to the report in May 2021.

For Twitter, the largest number of complaints received related to abuse/harassment (35), followed by hateful conduct (12), defamation (11), IP-related infringement (9) and misinformation (7). The largest number of URLs actioned related to illegal activities (404), followed by sensitive adult content (60) and IP-related infringement (27). Complaints received for hateful conduct decreased to 12 in December 2021 from 13 in November 2021, after rising to 25 in October 2021 from 6, 0, 12 and 0 in the previous months.

What continues to be astounding is that Twitter received zero complaints for content promoting suicide and for child sexual exploitation content. It is important to note here that India has 24.45 million Twitter users. The small number of monthly complaints received on such prominent issues points to two possibilities: either (i) the grievance redressal system is proving ineffective, or (ii) Twitter is able to take down most such content proactively. Unless there are disclosures to the contrary, or audits are conducted into the platform governance operations of these social media intermediaries, there is no easy way to know which explanation is more likely.

For WhatsApp, 528 reports were received in total, of which WhatsApp took action in 24 cases. All but one of these (in the ‘Other Support’ category) related to ban appeals, i.e. appeals against the banning of accounts.

Reports by ShareChat, LinkedIn and Snap Inc.

Unlike other social media intermediaries, ShareChat provides in its report for December 2021 the number of requests received from law enforcement authorities and investigating agencies. Twelve such requests were received in December 2021, and user data was provided in 9 of them. In 3 of these cases, content was also taken down for violation of ShareChat’s community guidelines.

ShareChat received 56,18,870 user complaints (as against 64,72,386 last month), which the report segregates into 22 reasons. The category for abusive language and others, which was removed last month, is back. ShareChat takes two kinds of removal actions: takedowns and bans. As per the report, takedowns are either proactive (on the basis of community guideline and other policy standard violations) or based on user complaints. Proactive takedowns/deletions for December 2021 included chatroom deletions (1,162), copyright takedowns (77,941), comment deletions (1,30,259), user-generated sexual content (2,41,939) and other user-generated content (47,18,374).

ShareChat imposes three kinds of bans: (i) a UGC (user-generated content) ban, under which the user cannot post any content on the platform for the specified ban duration; (ii) an edit profile ban, under which the user cannot edit any profile attributes for the specified period; and (iii) a comment ban, under which the user cannot comment on any post on the platform for the specified ban duration. The duration of these bans can be 1 day, 7 days, 30 days or 260 days. In case of repeated breach of guidelines, user accounts are permanently removed for 360 days. As a result, 16,920 accounts were permanently terminated in December 2021.

Snap Inc. received 58,147 content reports through Snap's in-app reporting mechanism in December 2021. Enforcement action was taken on content in 11,790 cases, and against 7,224 unique accounts. Most reports related to sexually explicit content (23,813), followed by impersonation (16,965), spam (5,272), violence/harm (4,491), harassment and bullying (3,857), regulated goods (3,114) and hate speech (635).

LinkedIn’s transparency reports contain global summaries in the form of its Community Report and Government Requests Report. With respect to India, LinkedIn received 30 requests for member data from the government in 2021.

Existing issues with the compliance reports

The following issues have undermined the transparency these reports are meant to achieve since their inception, and they persist in the reports for December 2021:

- Algorithms for proactive takedown: Social media intermediaries are opaque about the processes and algorithms they use for proactive takedown of content. Only WhatsApp has explained how it proactively takes down content, in a white paper that discusses its abuse detection process in detail. The lack of transparency about human oversight of the content that is taken down continues to be a concern. The IT Rules provide that social media intermediaries shall implement mechanisms for appropriate human oversight of measures deployed for proactive monitoring, including a periodic review of any automated tools. None of the social media intermediaries has reported on such a periodic review.

- Lack of uniformity: The major social media intermediaries do not report in a uniform manner. Each intermediary has adopted its own format and lists different policy areas or issues under which it takes down content. This is evident from the following: (i) the IT Rules were issued following a call for attention to the “misuse of social media platforms and spreading of fake news”, yet no social media intermediary other than Twitter discloses data on content takedown for fake news; (ii) Google and WhatsApp have not segregated their proactive actions by issue, and provide only the total number of proactive actions taken; and (iii) Twitter has identified only 2 broad issues for proactive takedowns, as opposed to the 10 issues identified by Facebook and Instagram. This lack of uniformity makes it difficult for the government to understand the different kinds of concerns (and their extent) associated with the use of social media by Indian users.

- No disclosure of government removal requests: Even though the IT Rules do not mandate disclosure of the number of content removal requests made by the government, truly advancing transparency in the digital life of Indians requires that social media intermediaries disclose, in their compliance reports, issue-wise government requests for content removal on their platforms.

How can the social media intermediaries improve their reporting in India?

The intermediaries can take the following steps when submitting their compliance reports under Rule 4(1)(d) of the IT Rules:

- Change the reporting formats: The social media intermediaries should endeavour to be truly transparent in their compliance reports. They have been following a copy-paste format from month to month, showing little to no effort to overcome the shortcomings and opacity of their reports. They must adhere to international best practices (discussed below) and make incremental efforts to tailor their compliance reports to further transparency in platform governance and content moderation.

- Santa Clara Principles must be adhered to: The social media intermediaries must incorporate the Santa Clara Principles on Transparency and Accountability in Content Moderation in letter and spirit. The operational principles of version 2.0 focus on numbers, notice and appeal. The first part, on ‘numbers’, sets out how data can be segregated by category of rule violated, special reporting requirements for decisions made with the involvement of state actors, how to report on the flags received, and parameters for increasing transparency around the use of automated decision-making.

The ongoing debate about control and regulations...

In the first week of February, the government said it was open to implementing stricter regulations for social media intermediaries. Recently, in response to a question in the Lok Sabha on whether the government planned to bring more regulations to prevent misuse of social media, the Minister of Electronics and Information Technology, Ashwini Vaishnaw, agreed that there was a need for more accountability through regulation. While the Ld. Minister has claimed that social media intermediaries have been following the IT Rules and publishing their monthly reports, he has failed to acknowledge, act on and respond to the inadequacies in reporting.

What more can the Government do?

Transparency by social media intermediaries in the compliance reports will enable the government to collect and analyse India-specific data which would further enable well-rounded regulation. For this, complete disclosure by the social media intermediaries is imperative. The Rules must be suitably amended to achieve transparency in a systematic manner. This can be achieved by prescribing a specific format and standard for reporting. The Santa Clara Principles can be used as a starting point in this regard.

We will be back next month with a fresh analysis of the reports. Stay tuned!

Important Documents

- WhatsApp’s IT Rules compliance report for December 2021. (link)

- Google’s IT Rules compliance report for December 2021. (link)

- Facebook’s and Instagram’s IT Rules compliance reports for December 2021. (link)

- Twitter’s compliance report for December 2021. (link)

- ShareChat’s IT Rules compliance report for December 2021. (link)

- LinkedIn’s Government Requests Report for 2021. (link)

- Snap’s compliance report for December 2021. (link)

- Our analysis of previous compliance reports. (link)