tl;dr

Google (including YouTube), Facebook, Instagram, WhatsApp, and Twitter have released their reports under Rule 4(1)(d) of the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021 for September 2021. The latest of these reports was made available in November 2021. The reports continue to suffer from the same deficiencies: no reporting on government requests, misleading metrics, and no transparency on the algorithms used for proactive monitoring. You can read our analysis of the previous reports here. This time, we have also analysed the compliance report of ShareChat and the transparency reports of LinkedIn and Snap!

Background

The Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021 (IT Rules) require significant social media intermediaries (SSMIs), under Rule 4(1)(d), to publish monthly compliance reports. In these reports, they are required to:

- Mention the details of complaints received and actions taken thereon, and

- Provide the number of specific communication links or parts of information that the social media platform has removed or disabled access to by proactive monitoring.

Google (including YouTube), Facebook, Instagram, WhatsApp and Twitter have recently released their compliance reports for September. To understand the impact of the IT Rules on users and on the functioning of intermediaries, we examine these reports and summarise the most important information below.

How is proactive monitoring being done?

The reports still lack true transparency, as the SSMIs have not disclosed the processes or algorithms they use for the proactive takedown of content. Read more about this in the analysis of the August reports here.

What does the data on proactive monitoring reveal?

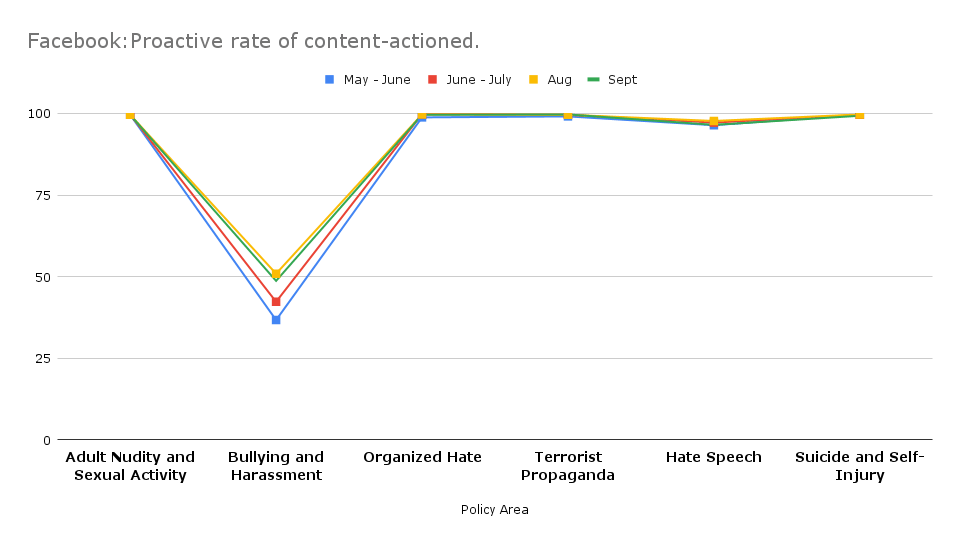

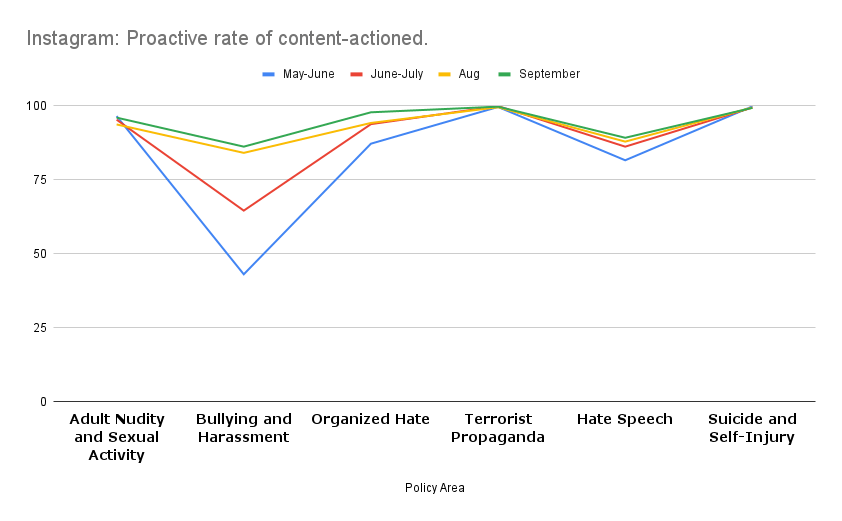

As per their reports (which now carry a Meta logo!), Facebook and Instagram use two metrics: (i) ‘content actioned’, which measures the number of pieces of content (such as posts, photos, videos or comments) they take action on for violating their standards and guidelines, and (ii) ‘proactive rate’, which is the percentage of ‘content actioned’ that they detected before any user reported it. This metric is problematic because the proactive rate is a percentage of actioned content only: it excludes all content on Facebook on which no action was taken, even though that content may itself be a cause for concern. This flaw becomes glaring in light of the documents leaked by Frances Haugen, which show that Facebook boasted in its “transparency reports” of proactively removing over 90% of identified hate speech, while internal records showed that “as little as 3-5% of hate” speech was actually removed. These documents confirm what civil society organizations have been asserting for years: Facebook has been fueling hate speech around the world through its failure to moderate content and its use of algorithms that amplify inflammatory content.
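The two figures can coexist because they use different denominators. The notation below is our own illustration, not Facebook's: let V be all violating content on the platform, A the subset that is actioned, and P the part of A detected before any user report. The numbers in the second line are hypothetical and chosen only to show the effect.

```latex
\text{proactive rate} = \frac{P}{A}, \qquad \text{share of violating content actioned} = \frac{A}{V}

% Hypothetical illustration: V = 1000, A = 40, P = 38
\frac{P}{A} = \frac{38}{40} = 95\%, \qquad \frac{A}{V} = \frac{40}{1000} = 4\%
```

Since the proactive rate is computed only over A, it can exceed 90% even when a tiny fraction of violating content is ever acted upon; the content that is never actioned (V minus A) simply never enters the calculation.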

Be that as it may, going by the metrics provided, the proactive rate for actioning bullying and harassment content remains the lowest of all categories at 48.7%, down from 50.9% last month. This is particularly low compared to 8 other issues (including hate speech and violent content) where the rate is above 96%. It means that more than half of the bullying and harassment content actioned was first reported by users rather than detected by Facebook, and that Facebook is consistently failing to curb the menace of bullying and harassment.

Data on proactive monitoring for the other SSMIs is as follows:

- Google took 4,50,246 removal actions as a result of automated detection in September, down from 6,51,933 in August and 5,76,892 in July.

- Twitter suspended 25,500 accounts for child sexual exploitation, non-consensual nudity and similar content, and 4,790 for the promotion of terrorism. These numbers stood at 26,726 and 4,648, respectively, in August.

- WhatsApp banned 22,09,000 accounts in September, as opposed to 20,70,000 in August, 30,27,000 between June 16 and July 31, 2021, and 20,11,000 between May 15 and June 15, 2021.

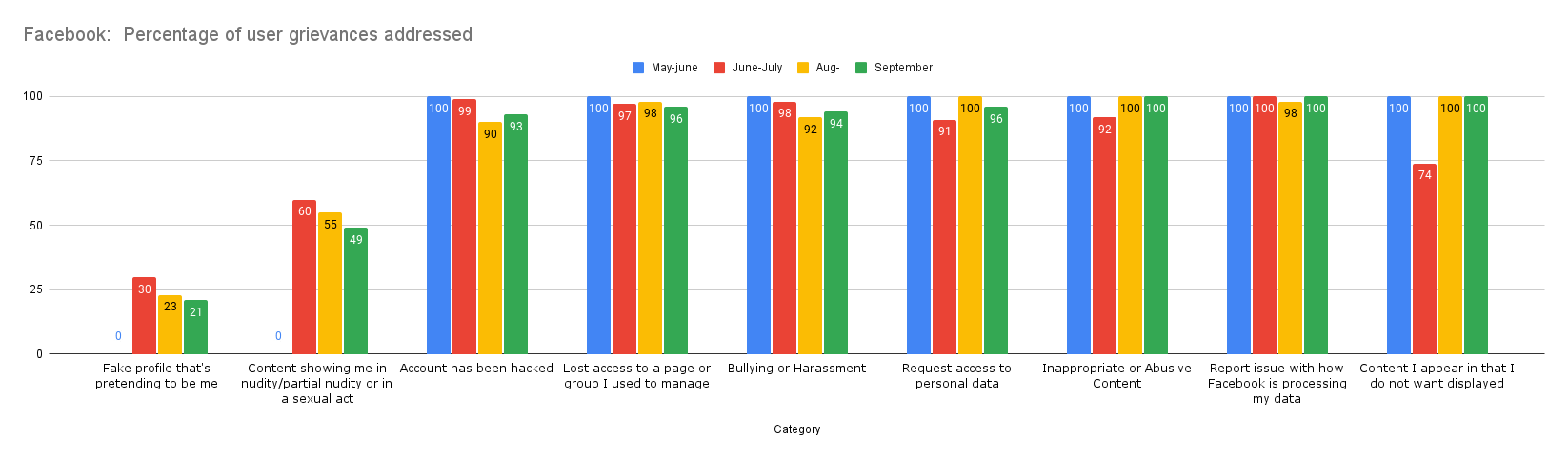

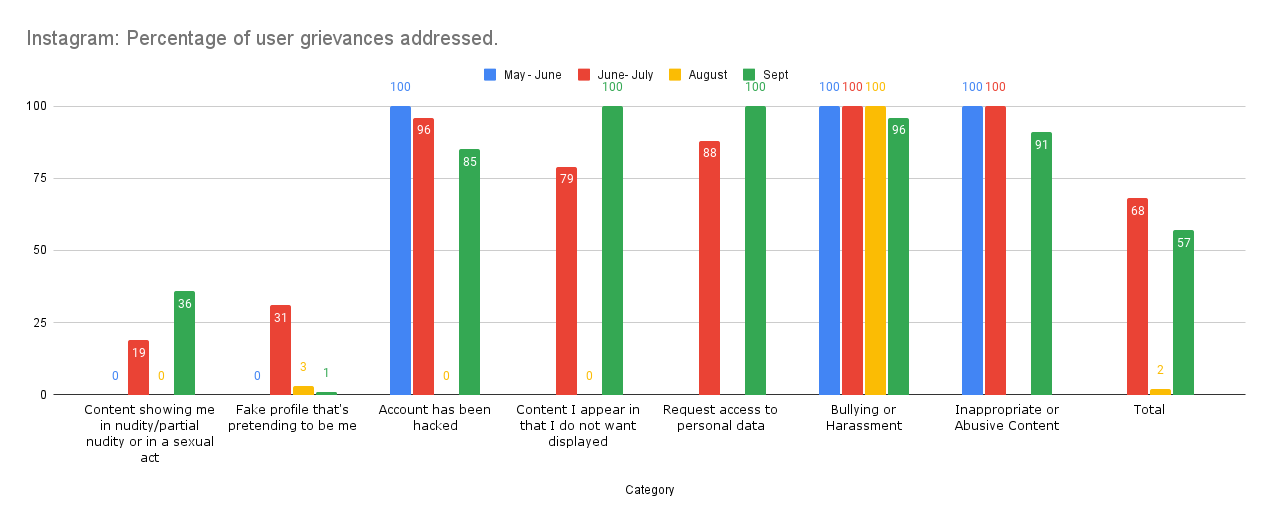

What does the data on the complaints received reveal?

In the case of Google, 97.1% of the complaints received, and 99.9% of the content removal actions taken, related to copyright and trademark infringement. This is unchanged from the previous report, in which 99.9% of removal actions were likewise for copyright and trademark infringement. Other reasons for content removal included graphic sexual content (11), court order (10), counterfeit (5), defamation (2), and impersonation (2). A recent trend of ‘complaint bombing’ content for copyright infringement on YouTube is being seen in India, and it is being misused to suppress dissent and criticism. We have written more about it here.

For Twitter, the largest number of complaints received related to defamation (304), followed by abuse/harassment, impersonation and misinformation, while the largest number of URLs actioned related to abuse/harassment (126). Once again, zero complaints were received for content promoting suicide or terrorism, only 6 for hateful conduct and only 1 for child sexual exploitation content. It is important to note that India has 22.1 million Twitter users, and the low number of monthly complaints on such prominent issues may be attributable to an ineffective grievance redressal system.

For WhatsApp, 560 reports were received in total out of which action was taken in 51 cases, 50 of which were related to ban appeals i.e. appeals against banning of accounts.

What are the major policy areas/issues under which content is taken down?

There is a lack of consistency in how much detail SSMIs provide in their compliance reports. Each SSMI has adopted its own format and metrics, and lists different policy areas or issues under which it takes down content. Other than Facebook and Instagram adding “other issues” as a new category in the data on grievances received and action taken in September, there is no change in how the data is segregated by reason. Read more about this in the analysis of the August reports here.

Bonus: Reports by ShareChat, LinkedIn and Snap Inc.

Unlike other SSMIs, ShareChat has disclosed in its report for September 2021 the number of requests from law enforcement authorities and investigating agencies: 5, of which user data was provided in 4 and takedown action was taken in none. 95,39,000 user complaints were received, which the report segregates into 22 reasons. ShareChat takes two kinds of removal action: takedowns and bans. As per the report, takedowns are either proactive (on the basis of community guidelines violations) or based on user complaints. Proactive takedowns for September included: copyright takedown (51,973), sexual user generated content discard (270,107), user generated content discard (2,824,150), chatrooms deleted (2,783) and comments deleted (149,331).

ShareChat imposes three kinds of bans: UGC ban (the user cannot post any content on the platform for the specified ban duration), edit profile ban (the user cannot edit any profile attributes for the specified time period) and comment ban (the user cannot comment on any post on the platform for the specified ban duration). The duration of these bans can be 1 day, 7 days, 30 days or 260 days. In case of repeated breach of guidelines, user accounts are permanently removed for 360 days. 89,159 accounts were permanently terminated in September.

LinkedIn and Snap Inc. have transparency reports containing global summaries updated on their websites. With respect to India, 6 ‘International Government Information Requests’, 22 account/content violation takedowns and 1 CSAM takedown have been identified for the period July 1 to December 31, 2020 by Snap Inc. LinkedIn received 29 requests for member data by the government between July and December 2020.

How can the SSMIs improve their reporting in India?

The SSMIs have failed to incorporate the Santa Clara Principles on Transparency and Accountability in Content Moderation in letter and spirit. While the SSMIs have attempted to adhere to these principles, there is still a long way to go. Google, WhatsApp and Twitter, in particular, have failed to adhere to the first principle, which requires segregating data by category of rule violated, by format of content at issue (e.g., text, audio, image, video, live stream), by source of flag (e.g., governments, trusted flaggers, users, different types of automated detection) and by location of flaggers and impacted users (where apparent). ShareChat, on the other hand, has segregated its data: it has provided the number of requests from law enforcement authorities and investigating agencies, and has divided removal of content into 22 reasons. Further, the SSMIs have again failed to explain how automated detection is used across each category of content.

The IT Rules were floated following a call for attention on the “misuse of social media platforms and spreading of fake news”, yet no SSMI other than Twitter appears to disclose data on content takedowns for fake news in its compliance report.

Once again, the reports do not disclose content removal requests made by the government. As revealed in the Lok Sabha yesterday, the government ordered 9,849 accounts/URLs to be blocked in 2020 alone. To truly advance transparency in the digital life of Indians, it is imperative that all SSMIs disclose, in their compliance reports, issue-wise government requests for content removal on their platforms. ShareChat has done this in part, disclosing the number of requests from law enforcement authorities and investigating agencies, though without an issue-wise breakup.

Stay tuned! We will be back next month with an analysis of the next set of reports.

Important Documents

- WhatsApp’s IT Rules compliance report (link)

- Google’s IT Rules compliance report (link)

- Facebook’s and Instagram’s IT Rules compliance report (link)

- Twitter’s transparency report (link)

- ShareChat’s IT Rules compliance report (link)

- LinkedIn’s Government Requests Report (link)

- Snap’s Transparency Report for July 1 to December 31, 2020 (link)